AI is reshaping healthcare by tackling cybersecurity risks and regulatory compliance challenges. With sensitive patient data at stake, healthcare organizations face increasing cyber threats and stringent requirements under HIPAA and certification frameworks like HITRUST. AI tools offer solutions by automating compliance tasks, detecting security risks, and managing data volumes far beyond human capacity. Here's how AI is transforming healthcare:

- Cybersecurity: AI identifies threats, prevents breaches, and ensures data protection through encryption, real-time monitoring, and risk assessments.

- Regulatory Compliance: AI simplifies adherence to frameworks like HIPAA and HITRUST, automating audits, risk evaluations, and multi-factor authentication.

- Clinical Applications: From diagnostics to documentation, AI ensures data safety while improving efficiency in patient care and operational systems.

- Vendor Risks: Third-party AI vendors introduce security risks, requiring thorough assessments and ongoing monitoring to prevent breaches.

As AI adoption grows, healthcare organizations must integrate strong security measures, continuous monitoring, and vendor oversight to protect patient data and maintain compliance.

Regulatory Frameworks for AI in Healthcare

Understanding the regulatory landscape is essential for deploying AI in healthcare responsibly. While AI has revolutionized many aspects of the industry, it must navigate a complex web of federal regulations and pre-existing standards that were not initially designed with AI in mind. This creates potential gaps in addressing AI-specific risks, making compliance a crucial part of protecting patient data and ensuring smooth operations.

HIPAA, HITECH, and Data Privacy Requirements

HIPAA remains the gold standard for safeguarding healthcare data. AI systems must uphold the confidentiality and integrity of Electronic Protected Health Information (ePHI) by incorporating strong encryption, strict access controls, and detailed audit trails. AI-powered tools can also bolster compliance by enabling real-time monitoring and incident response, which aligns with HIPAA's continuous oversight requirements.

Proposed updates to the HIPAA Security Rule would raise the bar by removing the distinction between "required" and "addressable" specifications and mandating multi-factor authentication and encryption. Under the proposal, organizations would have a 240-day window to implement these changes once a final rule is published. Additionally, AI systems should be equipped with comprehensive audit capabilities to track user interactions with sensitive data, which is a critical element of regulatory adherence.

HITRUST Certification Standards

HITRUST provides a certifiable framework that consolidates various regulatory requirements, making it particularly useful for healthcare organizations juggling multiple compliance obligations. Unlike HIPAA, which sets foundational standards, HITRUST offers a more integrated, risk-based approach. For AI deployments, achieving HITRUST certification not only signals a high level of security readiness but also simplifies vendor assessments and supports ongoing threat evaluations.

FDA and NIST Guidelines for AI and Machine Learning

The U.S. Department of Health and Human Services (HHS) leans on the NIST AI Risk Management Framework to guide its approach to AI oversight. This framework informs internal assessments for high-impact AI applications and is embedded in the Authority to Operate (ATO) security review process, ensuring AI projects undergo continuous monitoring even after deployment.

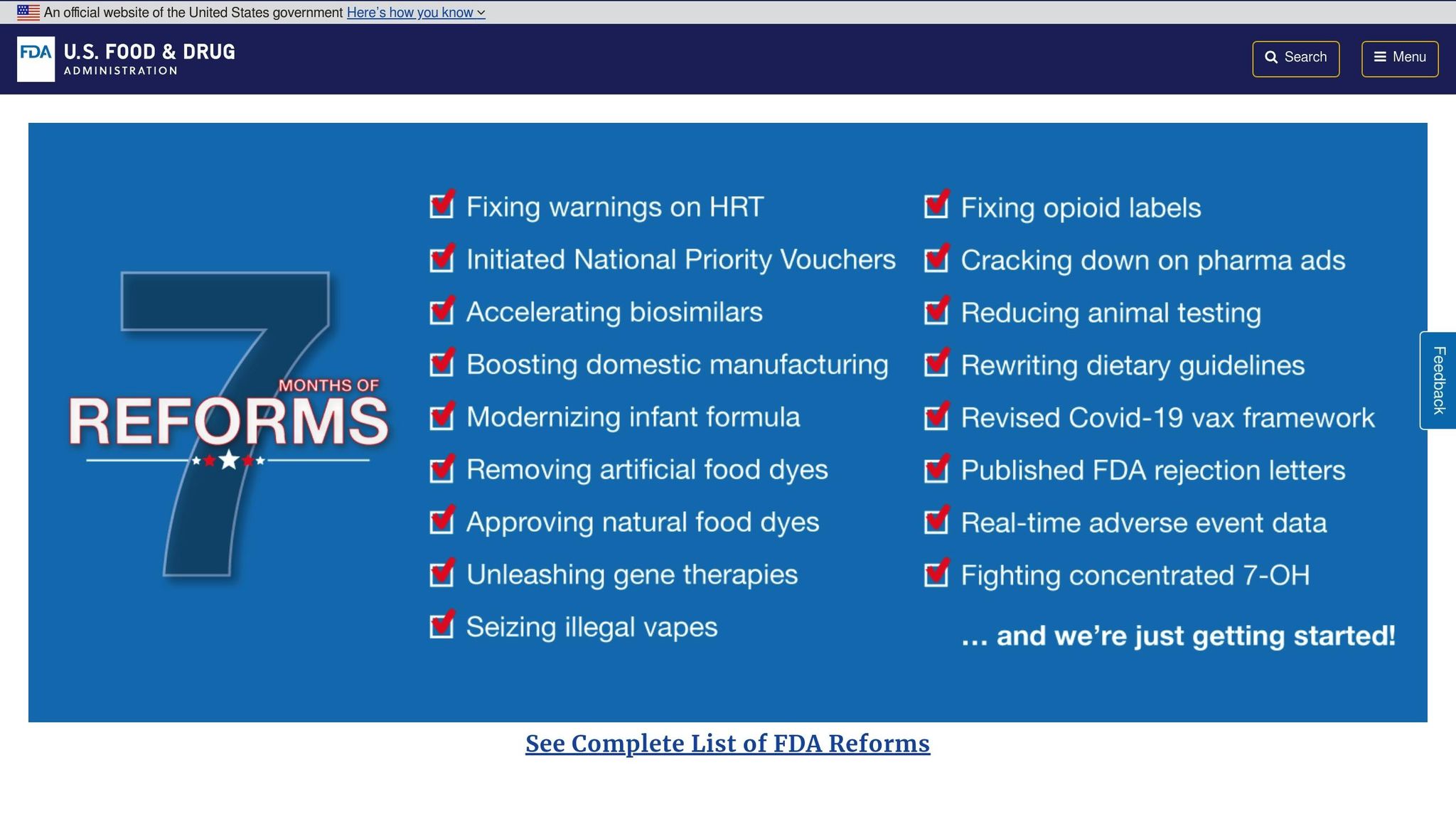

On December 1, 2025, the FDA introduced an "agentic AI" platform designed to streamline regulatory meetings and pre-market product reviews while maintaining human oversight. However, despite these advancements, regulatory gaps persist. For instance, the medical device cybersecurity requirements in Section 524B of the Federal Food, Drug, and Cosmetic Act only partially address AI-specific risks like adversarial attacks or data poisoning.

The urgency of addressing these gaps is underscored by the ECRI Institute, which has identified AI as the top health technology hazard for 2025. Furthermore, HHS reported 271 active or planned AI use cases in fiscal year 2024, with a projected 70% increase in new use cases for FY 2025. This rapid growth highlights the pressing need for more comprehensive governance frameworks to manage AI risks effectively.

These evolving regulations and standards lay the groundwork for examining specific AI use cases and the unique compliance challenges they present in healthcare. Up next, we’ll dive into these use cases and explore how AI is shaping the future of healthcare security.

AI Use Cases and Compliance Requirements in Healthcare

AI is reshaping healthcare, but every new application comes with its own set of compliance hurdles. In 2024 alone, there were over 700 healthcare data breaches involving 500 or more individuals. This highlights how critical it is for healthcare organizations to secure AI systems while staying compliant with regulations. By aligning with the regulatory frameworks and security measures discussed earlier, AI can be integrated into healthcare in a way that prioritizes both innovation and compliance. Below, we’ll dive into how these measures apply to clinical tools, operational systems, and generative AI.

Clinical AI Tools and Patient Data Protection

AI tools used in diagnostics, imaging, and remote monitoring process large volumes of Protected Health Information (PHI). This makes safeguarding data a top priority. These systems should use AES-256 encryption for data at rest and TLS/HTTPS protocols during transmission to block unauthorized access. But encryption is just the start - advanced features like algorithm audits and tamper-evident logging can be embedded directly into clinical workflows to enhance security.

One standout method is the use of cryptographic frameworks like Meta-Sealing. This technology creates tamper-evident records of every AI action and data transformation, so any later alteration can be detected and decisions remain traceable and auditable. Such measures align with HIPAA and emerging guidelines like FUTURE-AI, which focus on explainability and traceability.
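To make the idea of tamper-evident logging concrete, here is a minimal sketch of a hash-chained audit log. It is not the Meta-Sealing framework itself, only a generic illustration of the underlying principle; the function names and the log entry fields are assumptions made for this example.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash, payload):
    """Hash the previous entry's hash together with this entry's payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log, payload):
    """Append a payload to the log, chaining it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    entry = {
        "prev_hash": prev_hash,
        "payload": payload,
        "hash": _entry_hash(prev_hash, payload),
    }
    log.append(entry)
    return entry


def verify_chain(log):
    """Return True only if every entry still matches its recorded hash."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Because each entry's hash covers the previous entry's hash, editing or removing an earlier record invalidates every later one. A production system would also need to protect the most recent hash, for example by signing it or storing it in a separate system.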

AI can also improve how data is anonymized and de-identified. Research shows that as few as 15 demographic attributes are enough to re-identify nearly every American in an anonymized dataset. AI-powered segmentation tools can automatically flag highly sensitive data, such as records governed by 42 CFR Part 2, replacing time-consuming manual processes. Comprehensive audit trails that log every interaction with sensitive data further bolster compliance.
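The re-identification risk described above can be estimated before a dataset is released. The sketch below counts how many records share each combination of quasi-identifiers (a basic k-anonymity check). The field names `zip3`, `birth_year`, and `sex` are hypothetical, and a real de-identification review involves far more than this single measure.

```python
from collections import Counter


def _key(record, quasi_identifiers):
    """Build the tuple of quasi-identifier values for one record."""
    return tuple(record[q] for q in quasi_identifiers)


def smallest_group_size(records, quasi_identifiers):
    """Return k: the size of the smallest group of records sharing the
    same quasi-identifier values (0 for an empty dataset)."""
    groups = Counter(_key(r, quasi_identifiers) for r in records)
    return min(groups.values()) if groups else 0


def reidentifiable(records, quasi_identifiers):
    """Return the records that are unique on the given quasi-identifiers."""
    groups = Counter(_key(r, quasi_identifiers) for r in records)
    return [r for r in records if groups[_key(r, quasi_identifiers)] == 1]
```

Adding attributes to `quasi_identifiers` can only shrink the groups, which is why a handful of seemingly harmless fields can single out an individual.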

This rigorous approach to data protection isn’t limited to clinical tools. Operational systems, which handle financial and administrative data, also require equally stringent measures.

AI in Revenue Cycle Management and Operations

Revenue cycle management (RCM) systems - like AI-driven tools for coding, billing, and prior authorizations - process both financial and medical data, placing them in a high-risk category. These systems must adhere to the technical controls outlined in the updated HIPAA Security Rule.

To mitigate risks, RCM systems should enforce strict access controls and use continuous network monitoring to identify and address threats. Clear policies must define who can access PHI and under what conditions, ensuring only authorized personnel handle sensitive data.
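As a rough illustration of "who can access PHI and under what conditions," the following sketch pairs a role-based policy with an audit record for every decision. The roles, resource names, and log fields are all hypothetical; a real deployment would load the policy from a managed source and integrate with the organization's identity provider.

```python
from datetime import datetime, timezone

# Hypothetical role-to-resource policy for an RCM system.
POLICY = {
    "billing_specialist": {"claims", "demographics"},
    "coder": {"claims", "clinical_notes"},
    "auditor": {"claims", "demographics", "clinical_notes"},
}


def check_access(user, role, resource, audit_log):
    """Decide whether a role may read a resource and record the decision.

    Unknown roles are denied by default, and denied attempts are logged
    as well as granted ones.
    """
    allowed = resource in POLICY.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "resource": resource,
        "allowed": allowed,
    })
    return allowed
```

Logging denials alongside approvals matters here: repeated denied requests are often the first visible sign of misuse or a compromised account.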

Billing and payment systems also need robust risk management strategies to address vulnerabilities like algorithmic biases or data poisoning. A Governance, Risk, and Compliance (GRC)-focused approach helps organizations pinpoint these risks. Regular audits of both AI outputs and access logs can catch compliance issues early, preventing them from escalating.

Now, let’s look at how generative AI fits into clinical workflows while maintaining compliance.

Generative AI in Clinical Workflows

Generative AI tools, used for tasks like patient communication, clinical documentation, and administrative work, bring their own compliance challenges. Issues like data leakage and unauthorized disclosures are particularly concerning. To combat this, these systems must implement strict input and output controls to ensure PHI isn’t accidentally shared with unauthorized parties or used to train external AI models.

One effective strategy is deploying an AI gateway. This centralized control point manages access, monitors data flows, and enforces security policies. It provides healthcare organizations with a way to track exactly what information is being processed by the AI system, adding an extra layer of protection.

Staff training is another critical piece of the puzzle. Healthcare professionals need to understand the privacy risks associated with generative AI and learn best practices for maintaining security. AI literacy programs can help bridge this gap. Organizations should also establish clear policies on how AI tools handle PHI and provide regular updates to address new risks as generative AI evolves. With these measures in place, healthcare providers can safely integrate generative AI while maximizing its potential benefits.

Managing AI Cybersecurity Risks in Healthcare

AI Cybersecurity Risks in Healthcare: 2024-2025 Statistics and Threats

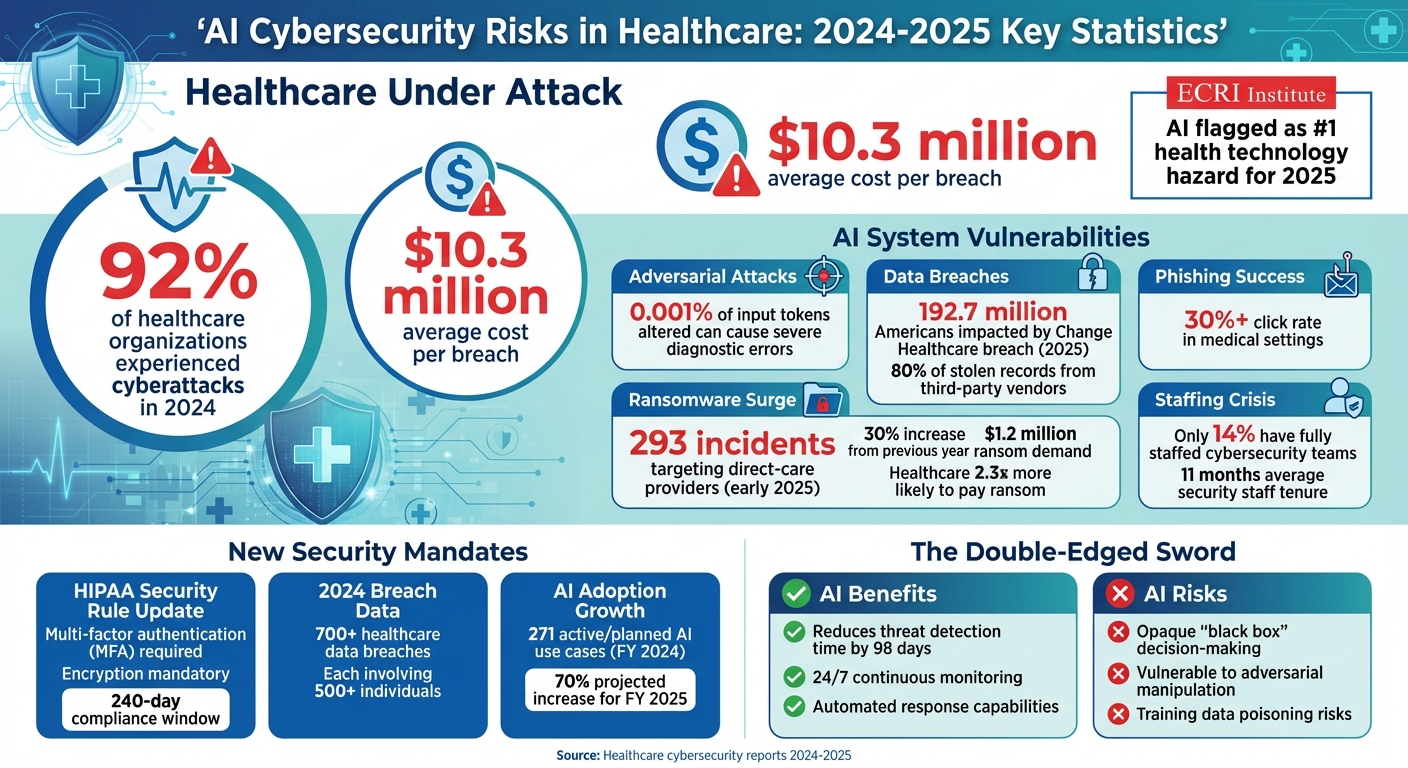

The integration of AI in healthcare has introduced a new wave of cybersecurity challenges. In 2024, a staggering 92% of healthcare organizations reported experiencing cyberattacks, with the average cost of a breach reaching $10.3 million. The ECRI Institute has flagged AI as the top health technology hazard for 2025, citing its potential for catastrophic failures. These risks directly threaten patient safety and the stability of healthcare organizations. Below, we delve into these threats and explore the measures needed to address them.

AI-Related Security Threats

The vulnerabilities tied to AI systems in healthcare underline the need for robust security measures to ensure compliance and safeguard operations.

Adversarial attacks are a major concern. Research indicates that altering just 0.001% of input tokens can lead to severe diagnostic errors in AI systems. For example, an attacker could subtly manipulate imaging data or lab results, causing the AI to misdiagnose a condition or recommend inappropriate treatment.

Model poisoning is another threat, targeting the training phase of AI models. By injecting malicious data into training datasets, attackers can compromise the integrity of the model at its core. This is particularly alarming in healthcare, where AI models often rely on data from multiple sources. Without cryptographic verification and detailed audit trails, organizations cannot ensure that their models are built on clean, reliable data.

Data breaches continue to escalate, with external sources becoming the primary culprits. For instance, the Change Healthcare breach in 2024 affected an estimated 192.7 million Americans through a single vendor compromise. Alarmingly, 80% of stolen patient records now originate from third-party vendors, not the hospitals themselves. This shift highlights the importance of scrutinizing vendor security alongside internal systems.

Phishing and social engineering tactics have evolved to exploit AI vulnerabilities. In medical settings, targeted phishing campaigns achieve click rates exceeding 30%. Once attackers gain access, they can steal training data, manipulate AI outputs, or disrupt operations. The rise of remote work and telehealth has only expanded these risks.

Ransomware attacks have surged, with 293 incidents targeting direct-care providers in early 2025 alone - a 30% increase from previous years. Average ransom demands have climbed to $1.2 million, and healthcare organizations are 2.3 times more likely to pay the ransom compared to other industries. When ransomware locks AI systems, the fallout can halt clinical operations and compromise patient care.

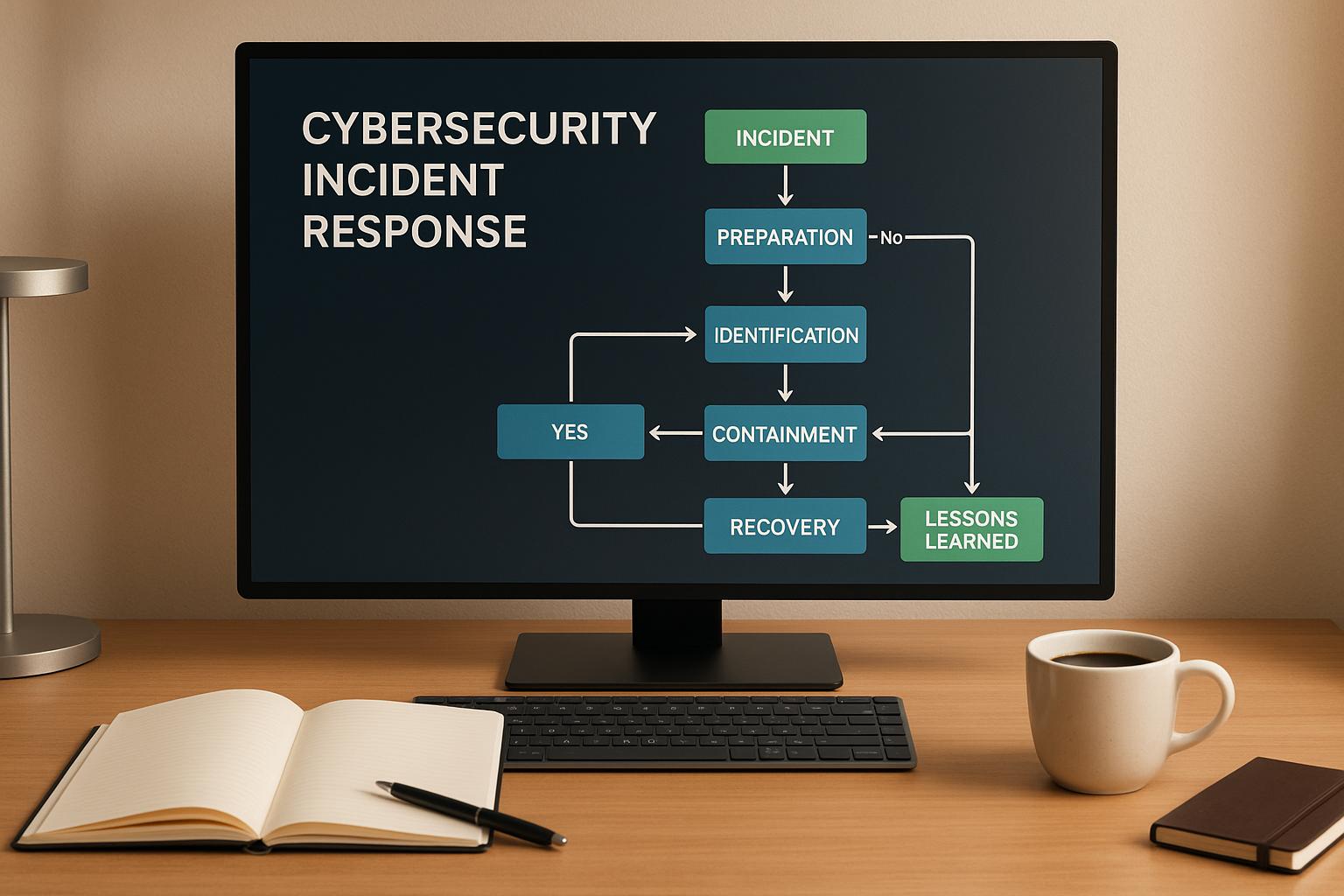

Security Controls for AI Systems

Implementing strong security measures is critical to both meeting updated HIPAA requirements and addressing the unique challenges posed by AI.

The proposed revisions to the HIPAA Security Rule would require multi-factor authentication (MFA) and encryption, with a 240-day compliance window after a final rule is published. MFA helps prevent unauthorized access, even if credentials are stolen, while encryption protects sensitive data during storage and transmission.
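One common second factor is a time-based one-time password (TOTP), as specified in RFC 6238. The sketch below shows how such codes are derived and checked using only the Python standard library. It is meant to illustrate the mechanism, not to replace a vetted authentication product; the `window` parameter and function names are choices made for this example.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret, counter, digits=6):
    """HMAC-based one-time password (RFC 4226) for a given counter."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret, at=None, step=30, digits=6):
    """Time-based one-time password (RFC 6238) for the given Unix time."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret, submitted, at=None, step=30, window=1):
    """Accept a code from the current step or `window` steps either side,
    which tolerates small clock drift between server and device."""
    now = time.time() if at is None else at
    counter = int(now // step)
    for delta in range(-window, window + 1):
        candidate = counter + delta
        if candidate < 0:
            continue
        expected = hotp(secret, candidate)
        if hmac.compare_digest(expected.encode(), submitted.encode()):
            return True
    return False
```

Even if a password is stolen, an attacker without the shared secret cannot produce the code for the current time step, which is the property the MFA requirement relies on.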

Securing AI training pipelines demands a layered approach. Organizations should verify training data sources using cryptographic methods, maintain detailed audit trails for all data changes, and keep training environments separate from production systems. This separation ensures that even if a training environment is compromised, operational AI systems remain unaffected.
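Cryptographic verification of training data can be as simple as a signed manifest of file digests that is checked before every training run. The sketch below assumes the files are available as bytes and that a secret key is shared between whoever approves the dataset and the training pipeline; both the function names and the HMAC-based signing choice are assumptions for illustration, and asymmetric signatures are a common alternative.

```python
import hashlib
import hmac
import json


def _sign(digests, key):
    """HMAC-SHA256 over a canonical JSON encoding of the digest table."""
    body = json.dumps(digests, sort_keys=True).encode("utf-8")
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def build_manifest(files, key):
    """Record a SHA-256 digest per file and sign the whole table."""
    digests = {name: hashlib.sha256(data).hexdigest()
               for name, data in files.items()}
    return {"digests": digests, "signature": _sign(digests, key)}


def verify_manifest(files, manifest, key):
    """Return a list of problems; an empty list means the data verified."""
    expected = _sign(manifest["digests"], key)
    if not hmac.compare_digest(expected, manifest["signature"]):
        return ["manifest signature mismatch"]
    problems = []
    for name, digest in manifest["digests"].items():
        if name not in files:
            problems.append(f"missing: {name}")
        elif hashlib.sha256(files[name]).hexdigest() != digest:
            problems.append(f"modified: {name}")
    for name in files:
        if name not in manifest["digests"]:
            problems.append(f"unexpected: {name}")
    return problems
```

A pipeline would refuse to train when `verify_manifest` returns anything other than an empty list, and would write the result to its audit trail either way.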

Validation frameworks play a crucial role in defending against AI-related threats. These frameworks rigorously test models for vulnerabilities, including adversarial manipulation. Conducting regular adversarial testing - where systems are intentionally challenged - can uncover weaknesses before attackers exploit them.

The Health Sector Coordinating Council (HSCC) is set to release 2026 guidance on AI cybersecurity. This will cover areas such as education, cyber defense, governance, secure-by-design principles, and third-party risk management. Healthcare organizations should prepare to align their security practices with this forthcoming guidance.

Finally, oversight and governance are essential. Clear structures should define responsibilities for AI security, risk assessments, and protocols for addressing vulnerabilities. These efforts must also adhere to civil rights and privacy laws, ensuring that ethics and transparency remain at the forefront.

Benefits and Risks of AI for Cybersecurity

AI in healthcare cybersecurity is a double-edged sword. While it offers powerful defense capabilities, it also introduces new vulnerabilities. Balancing these aspects is key, as shown in the table below:

| AI Benefits for Cybersecurity | AI Risks for Cybersecurity |
|---|---|
| Faster threat detection: AI can reduce incident identification time by 98 days, enabling quicker responses. | Opaque decision-making: Many AI models function as "black boxes", making it hard to understand their decisions. |
| Continuous monitoring: AI analyzes network traffic and user behavior 24/7, spotting anomalies humans might miss. | Adversarial manipulation: Attackers can craft inputs to trick AI, leading to missed threats or false positives. |
| Automated response: AI can isolate compromised systems and block malicious traffic without human intervention. | Data dependency: Large datasets are essential for AI, raising privacy and storage concerns. |
| Pattern recognition: AI detects subtle patterns across vast datasets, identifying sophisticated attacks. | Training vulnerabilities: Poisoned training data can compromise AI models from the start. |
| Scalability: AI can protect growing networks without needing proportional increases in staff. | Third-party risks: External vendors providing AI tools can introduce supply chain vulnerabilities. |

The healthcare sector faces a critical challenge: only 14% of organizations have fully staffed cybersecurity teams, and the average tenure for security staff is just 11 months. While AI can help bridge this gap, it’s not a standalone solution. Combining AI tools with skilled human oversight ensures systems are continually monitored, validated, and improved.

Third-Party AI Vendors and Outsourced Compliance Support

Third-party vendors pose a significant cybersecurity challenge in healthcare, with 80% of breaches originating from business associates. AI vendors, in particular, amplify these risks due to their access to sensitive data. Often, these vendors operate with smaller security budgets and less stringent controls compared to the organizations they serve.

Third-Party AI Vendor Risk Management

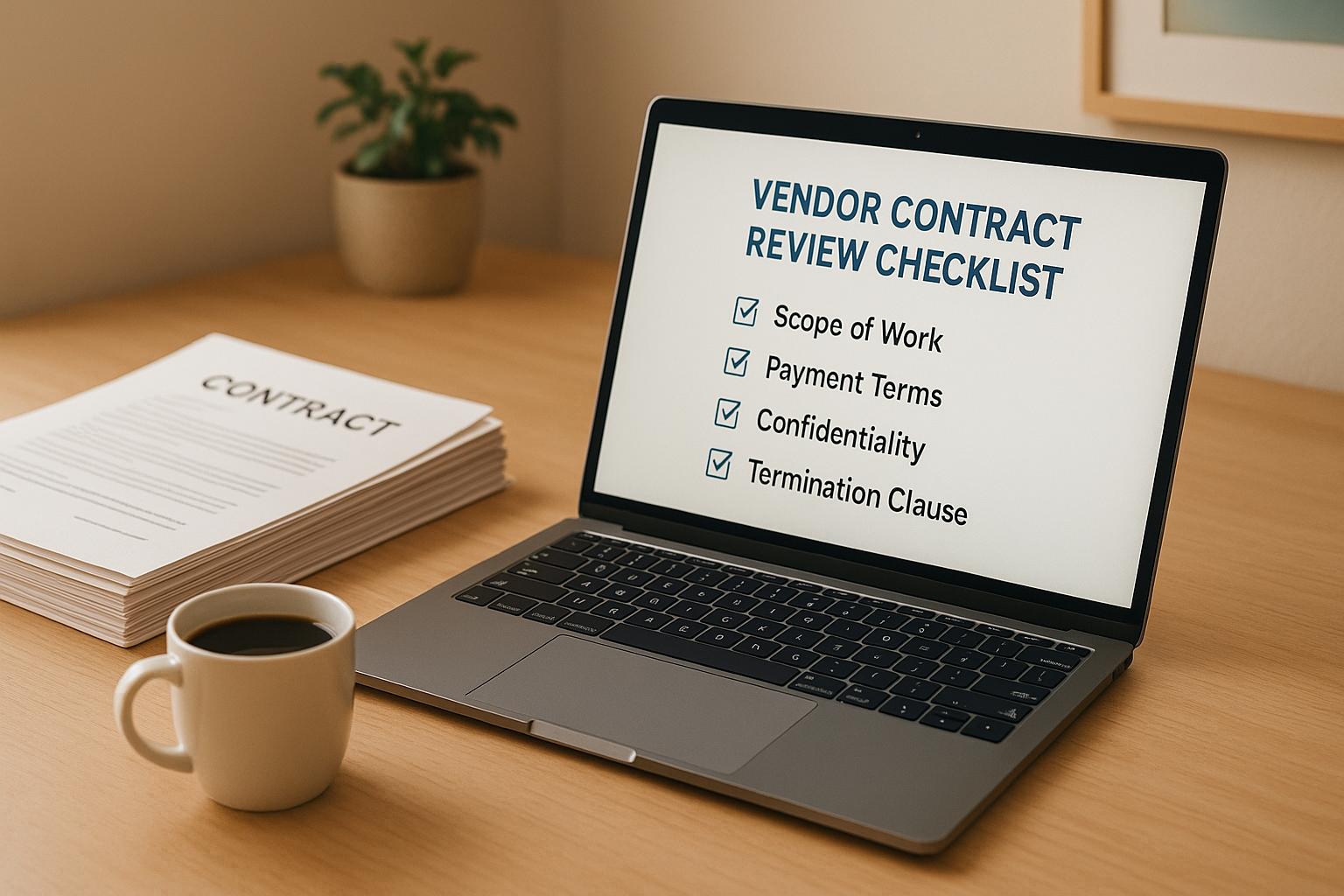

Managing risks associated with AI vendors requires more than standard contracts. Issues like model transparency, data ethics, and algorithmic bias demand a tailored approach. However, organizations often struggle with fragmented review processes, limited technical expertise, and unclear accountability.

To mitigate these risks, adopt a framework that prioritizes model transparency, continuous monitoring, robust data governance, bias testing, and seamless integration with existing governance structures. Before onboarding an AI vendor, conduct thorough risk assessments to ensure their security practices align with your standards and that they can securely manage your data. Contracts should mandate measures like multi-factor authentication and AES-256 encryption.

Oversight doesn’t end once the contract is signed. In 2024 alone, the healthcare sector experienced 444 cyber incidents, including 206 data breaches and 238 ransomware attacks. Regular audits and risk assessments of AI vendors can uncover vulnerabilities early, reducing the likelihood of breaches. Additionally, upcoming guidance from the Health Sector Coordinating Council in 2026 will focus on third-party risks and supply chain transparency, providing actionable steps for vendor oversight. This approach aligns closely with the broader AI cybersecurity framework, creating a unified strategy for managing internal and external risks.

How Embedded Compliance Teams Support AI Adoption

Many healthcare organizations lack the resources to effectively manage AI vendor risks and ensure continuous audit readiness. Embedded compliance teams step in to fill this gap, acting as an extension of the organization and handling tasks such as vendor risk management and ongoing compliance upkeep.

These teams conduct vendor security assessments, manage business associate agreements, implement essential controls like multi-factor authentication and AES-256 encryption, and maintain continuous evidence collection for audits. For example, in February 2025, a leading surgical robotics company partnered with an embedded compliance provider to secure its cloud-based video processing pipeline. This collaboration enabled AI-enhanced threat detection, reduced security incident response times by 70%, and ensured HIPAA-compliant cloud security.

Conclusion: Creating an AI Compliance Plan for Healthcare

Key Points for Healthcare Leaders

AI is transforming how healthcare operates, but it also brings serious privacy and security concerns. A striking 92% of organizations reported cyberattacks in 2024, yet only 41% feel confident that their current cybersecurity measures adequately protect GenAI applications. This gap highlights the urgent need for stronger safeguards.

Moving forward, healthcare organizations must go beyond simply meeting HIPAA requirements. This means incorporating practices like algorithm audits, tamper-evident logging, and continuous monitoring into AI workflows. Vendor risk management is no longer optional - it’s essential. For organizations without the internal capacity to tackle these challenges, embedded compliance teams like Cycore can step in. These teams handle critical tasks such as vendor assessments, enforcing security controls (like multi-factor authentication and AES-256 encryption), and maintaining ongoing audit readiness. This allows your team to focus on innovation while staying compliant.

Next Steps

To address these challenges effectively, start with a thorough gap analysis of your AI systems. Compare your current practices against HIPAA, HITRUST, and upcoming HSCC guidelines. From there, implement specific security measures to address risks like data poisoning, adversarial attacks, and opaque algorithms.

Continuous monitoring is key to maintaining a strong security posture and managing vendor risks. Update your business associate agreements to include detailed technical requirements, and develop risk scoring methods that account for data sensitivity, system criticality, and access levels. If managing these tasks in-house feels overwhelming, consider outsourcing compliance functions. This can include evidence collection, control implementation, and vendor oversight, allowing your team to stay focused on creating innovative products and driving growth.
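A risk scoring method of the kind described above can start as a simple weighted sum. In the sketch below, the 1-to-5 rating scale, the weights, and the tier thresholds are all placeholder assumptions that each organization would need to calibrate against its own risk appetite.

```python
from dataclasses import dataclass

# Hypothetical integer weights; higher means the factor counts for more.
WEIGHTS = {"data_sensitivity": 5, "system_criticality": 3, "access_level": 2}


@dataclass
class VendorProfile:
    name: str
    data_sensitivity: int    # 1 (public data) to 5 (full PHI)
    system_criticality: int  # 1 (peripheral) to 5 (clinical operations)
    access_level: int        # 1 (no direct access) to 5 (admin access)


def risk_score(vendor):
    """Return a 0-100 score from the weighted 1-5 ratings."""
    total = 0
    for field, weight in WEIGHTS.items():
        value = getattr(vendor, field)
        if not 1 <= value <= 5:
            raise ValueError(f"{field} must be between 1 and 5")
        total += weight * value
    weight_sum = sum(WEIGHTS.values())
    # Rescale so all-1 ratings map to 0 and all-5 ratings map to 100.
    return (total - weight_sum) * 100 / (4 * weight_sum)


def risk_tier(score):
    """Map a score to a review tier (thresholds are illustrative)."""
    if score >= 70:
        return "high"
    if score >= 40:
        return "medium"
    return "low"
```

For example, a vendor rated 4 for data sensitivity, 3 for system criticality, and 2 for access level scores 57.5 and lands in the "medium" tier, which might translate into a more frequent audit schedule than a "low" vendor receives.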

FAQs

How does AI improve cybersecurity in healthcare?

AI is transforming cybersecurity in healthcare by making threat detection faster and more precise. With advanced algorithms, AI can continuously monitor network activities, spot unusual patterns, and even predict potential security breaches before they happen.

It also plays a crucial role in helping healthcare organizations adhere to strict regulations like HIPAA. By automating tasks such as vulnerability management, real-time monitoring, and keeping detailed audit logs, AI not only safeguards sensitive patient information but also minimizes the chances of expensive breaches and compliance issues.

What regulatory challenges arise when using AI in healthcare?

Implementing AI in healthcare isn't without its hurdles, particularly when it comes to regulations. Organizations must prioritize compliance with laws like HIPAA and certification frameworks like HITRUST to ensure patient data remains private and secure. Beyond that, concerns around algorithm transparency and bias must be addressed, as these issues can directly undermine fairness and erode trust in AI systems.

For healthcare providers operating internationally, the challenge grows with the need to adhere to frameworks like GDPR. Navigating these cross-border regulations adds another layer of complexity. To reduce risks, it's crucial to maintain ongoing validation, thorough oversight, and meticulous documentation. These steps not only protect patients but also help minimize legal exposure, all while enabling AI to enhance healthcare delivery.

What steps can healthcare organizations take to reduce risks when working with third-party AI vendors?

Healthcare organizations can better manage risks associated with third-party AI vendors by prioritizing strong cybersecurity protocols. This includes implementing tools like multi-factor authentication and data encryption to safeguard sensitive information. Keeping a close eye on vendor performance and ensuring adherence to HIPAA and frameworks such as HITRUST are equally important.

Organizations should also put in place clear oversight mechanisms, perform detailed risk assessments, and regularly evaluate vendor compliance practices. These steps can help uncover potential weaknesses early and ensure that vendors consistently uphold the highest standards for protecting patient data.