The NIST AI Risk Management Framework (AI RMF), released in January 2023, provides a structured, voluntary approach to managing AI risks. With U.S. private-sector AI investment exceeding $100 billion in 2024 and global regulations tightening, including the EU AI Act, most of whose obligations apply from August 2026, risk management has become essential for businesses. The framework is organized around four core functions - Govern, Map, Measure, and Manage - that help organizations address risks like bias, security vulnerabilities, and transparency gaps.

Key takeaways:

The NIST AI RMF is a roadmap for creating safer, more accountable AI systems while aligning with fast-evolving regulations.

The 4 Core Functions of the NIST AI RMF

Understanding the Four Functions

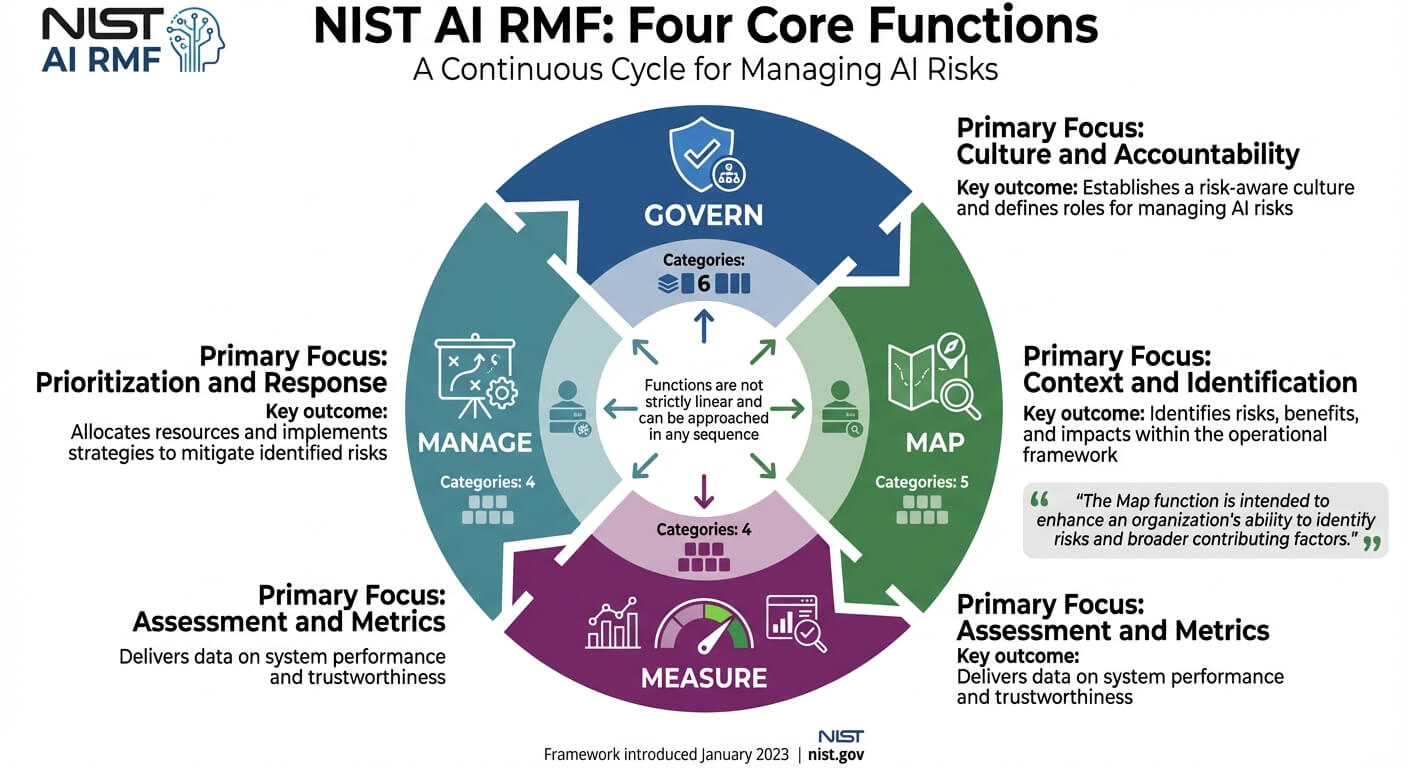

The NIST AI RMF Core is built around four interconnected functions: Govern, Map, Measure, and Manage. These functions work as a continuous cycle, guiding organizations through the various stages of an AI system's lifecycle.

Govern serves as the cornerstone, influencing all other functions by promoting leadership commitment and fostering a culture of accountability. Map focuses on defining the AI system's purpose, identifying its limitations, and assessing its potential effects on stakeholders. Measure uses both quantitative and qualitative tools to evaluate the risks uncovered during the mapping process. Finally, Manage prioritizes actions and crafts strategies to address the risks identified.

While many organizations start with Govern and then move to Map, the functions are not strictly linear. They can be approached in any sequence, with frequent cross-referencing between them. Each function is further divided into specific categories: Govern has 6, Map has 5, Measure has 4, and Manage has 4.

| Function | Primary Focus | Key Outcome |
| --- | --- | --- |
| Govern | Culture and Accountability | Establishes a risk-aware culture and defines roles for managing AI risks. |
| Map | Context and Identification | Identifies risks, benefits, and impacts within the operational framework. |
| Measure | Assessment and Metrics | Delivers data on system performance and trustworthiness. |
| Manage | Prioritization and Response | Allocates resources and implements strategies to mitigate identified risks. |

Let’s explore how the Govern function creates a foundation for leadership and accountability.

Govern: Building Leadership and Accountability

The Govern function positions AI risk management as a core responsibility of the organization. It requires senior leaders to take ownership of the entire AI lifecycle, combining technical expertise with ethical and societal considerations.

For example, in 2024, the U.S. Department of State utilized the NIST AI RMF to create its "Risk Management Profile for Artificial Intelligence and Human Rights." This profile offered actionable steps to align AI governance with international human rights principles, addressing risks like bias, surveillance, and censorship. Similarly, Workday adopted the framework by fostering collaboration across departments and ensuring leadership oversight to maintain ethical AI practices, evaluate risks, and assess third-party systems.

"Think of governance as the guardrails that allow you to drive faster, not the brakes that slow you down." – Keyrus

Effective governance starts with forming a cross-functional team, bringing together stakeholders from legal, compliance, data science, and business units. By 2026, nearly 60% of IT leaders plan to establish or update AI principles, with governance evolving from static policies to dynamic, ongoing processes. These include real-time monitoring of issues like model drift and bias. As organizations adopt more autonomous AI systems, governance is shifting toward machine-readable guardrails that can instantly detect and block non-compliant actions.
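As a rough illustration of what a machine-readable guardrail could look like, the sketch below stores a few policy rules as data and checks a proposed AI action against them before it runs. The `Action` fields, the rule names, and the blocked values are all hypothetical; a real policy would come out of your own governance process.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A proposed action by an AI system (hypothetical schema)."""
    tool: str
    data_classification: str  # e.g. "public", "internal", "restricted"


# Hypothetical policy kept as data so it can be versioned and audited.
POLICY = {
    "blocked_tools": {"send_external_email"},
    "blocked_data_classes": {"restricted"},
}


def evaluate(action, policy=POLICY):
    """Return (allowed, reasons); an action is blocked if any rule is violated."""
    reasons = []
    if action.tool in policy["blocked_tools"]:
        reasons.append(f"tool '{action.tool}' is not permitted")
    if action.data_classification in policy["blocked_data_classes"]:
        reasons.append(f"data class '{action.data_classification}' is not permitted")
    return (not reasons, reasons)


allowed, reasons = evaluate(Action("search_docs", "internal"))
blocked_ok, why = evaluate(Action("send_external_email", "restricted"))
```

Keeping the rules in a plain data structure rather than in code paths means the policy can be reviewed by non-engineers and changed without redeploying the system that enforces it.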

Map: Finding and Assessing AI Risks

The Map function lays the groundwork for understanding AI risks before a system is deployed. It involves creating a detailed inventory of AI systems, identifying stakeholders, and tagging risks based on factors like usage, data sensitivity, and potential harm.

"The Map function is intended to enhance an organization's ability to identify risks and broader contributing factors." – NIST AI RMF Core

A comprehensive AI inventory is the first step. This centralized registry should include all AI tools, from third-party vendor models to internal prototypes, ensuring no "shadow AI" operates without oversight. Once mapping is complete, organizations should have enough context to decide whether to move forward with the AI system’s design or deployment.
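A minimal sketch of such a registry is shown below, assuming each system is described by a handful of fields and that you have some separate source of "observed" tool names (for example expense or network logs). The field names and the `shadow_ai` helper are illustrative, not part of the framework.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    name: str
    owner: str             # accountable team or person
    source: str            # "internal" or "vendor"
    data_sensitivity: str  # "low", "medium", or "high"
    approved: bool = False


class Inventory:
    """Central registry of AI systems, keyed by name."""

    def __init__(self):
        self._systems = {}

    def register(self, system):
        self._systems[system.name] = system

    def shadow_ai(self, observed_names):
        """Return observed tools that are unregistered or not yet approved."""
        return sorted(
            name for name in observed_names
            if name not in self._systems or not self._systems[name].approved
        )


inventory = Inventory()
inventory.register(AISystem("resume-screener", "HR Analytics", "vendor", "high", approved=True))
unregistered = inventory.shadow_ai({"resume-screener", "chat-plugin"})
```

Here `unregistered` contains only `chat-plugin`, the tool that was seen in use but never entered in the registry.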


How to Implement the NIST AI RMF

Getting Started with Implementation

Start by conducting a gap analysis to measure your current AI practices against the criteria outlined in the NIST AI RMF. This assessment will help you identify which controls are already in place and highlight areas that need attention. Assemble a cross-functional AI Oversight Committee that includes experts from data science, legal, ethics, cybersecurity, and risk management.

Set clear risk tolerance levels and integrate AI risk management into your corporate governance framework. A risk matrix can help you evaluate AI risks based on their likelihood and potential impact, making it easier to prioritize mitigation strategies. The AI RMF itself does not define maturity levels, but many organizations borrow the Implementation Tiers from the NIST Cybersecurity Framework - Partial, Risk-Informed, Repeatable, and Adaptive - as benchmarks for assessing their current maturity and setting improvement goals.
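The risk matrix described above can be sketched in a few lines. This version assumes a three-level scale and multiplies likelihood by impact; the scale, the cut-offs in `risk_rating`, and the example risks are all assumptions to be replaced by your own documented tolerances.

```python
LEVELS = {"low": 1, "medium": 2, "high": 3}


def risk_score(likelihood, impact):
    """Score a risk as likelihood x impact on a 1-9 scale."""
    return LEVELS[likelihood] * LEVELS[impact]


def risk_rating(score):
    # Hypothetical cut-offs; derive real ones from your risk tolerance.
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"


# (description, likelihood, impact) - illustrative entries only
risks = [
    ("biased hiring recommendations", "medium", "high"),
    ("chatbot outage", "high", "low"),
    ("training data leak", "low", "high"),
]

# Highest score first, so mitigation effort goes to the top of the list.
ranked = sorted(risks, key=lambda r: risk_score(r[1], r[2]), reverse=True)
```

A multiplicative score is only one convention; some teams prefer a lookup table so that a low-likelihood, high-impact risk can be rated higher than its product would suggest.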

"Balance AI's business potential with its risks." – Leia Manchanda, Field CISO, Oracle

Create adaptable policies that can evolve alongside advancements in AI technology and changes in regulations. Define clear escalation pathways and assign responsibilities for reporting AI risks promptly. Once these foundational steps are in place, focus on integrating these practices into your existing systems to ensure smoother operations.

Connecting the AI RMF to Current Systems

To align the NIST AI RMF with your existing workflows, integrate it into your current Software Development Life Cycle (SDLC) and cybersecurity frameworks. NIST provides "Crosswalks" that map AI RMF functions to existing standards like ISO/IEC 42001 and the NIST Cybersecurity Framework 2.0, which can help reduce redundant work.

Develop a unified control set by mapping the requirements of the NIST AI RMF and other frameworks into a single internal control structure. Embed AI risk management into everyday processes such as procurement and software development. Centralize all AI governance materials - policies, risk assessments, and control evidence - into one repository. Automating evidence collection through platforms that link policies to AI systems and track risks in real time can significantly reduce administrative efforts.
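One way to picture a unified control set is as a mapping from each internal control to the external requirements it satisfies. The sketch below assumes that shape; the `CTRL-` identifiers and the `ISO-42001:<clause>` placeholder are invented for illustration, and the AI RMF subcategory labels should be checked against the official text before use.

```python
from collections import defaultdict

# Internal control -> external requirements it is meant to satisfy.
# Control IDs and the "<clause>" placeholder are illustrative only.
CONTROLS = {
    "CTRL-001 AI roles documented": ["AI-RMF:GOVERN 2.1", "ISO-42001:<clause>"],
    "CTRL-002 AI system inventory": ["AI-RMF:GOVERN 1.6"],
}


def index_by_requirement(controls):
    """Invert the mapping: requirement -> list of controls that cover it."""
    index = defaultdict(list)
    for control, requirements in controls.items():
        for requirement in requirements:
            index[requirement].append(control)
    return dict(index)


def uncovered(required, controls):
    """Return required items that no internal control currently covers."""
    covered = index_by_requirement(controls)
    return [r for r in required if r not in covered]


REQUIRED = ["AI-RMF:GOVERN 1.6", "AI-RMF:GOVERN 2.1", "AI-RMF:MAP 1.1"]
gaps = uncovered(REQUIRED, CONTROLS)
```

Because one control can point at several frameworks, evidence collected once for `CTRL-001` can be reused for every requirement it maps to, which is where the reduction in duplicate work comes from.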

Once integrated, focus on continuous monitoring and process refinement to maintain effectiveness.

Tracking and Improving Risk Management

Continuous monitoring is key to managing AI risks effectively. Set up real-time systems to track model behavior, performance metrics, and emerging vulnerabilities. The NIST AI RMF Playbook, available in multiple formats like PDF, CSV, Excel, and JSON, offers actionable recommendations that can be seamlessly integrated into your workflows. Use this resource to compare your current practices to the framework's guidelines and address any gaps.
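As one example of tracking model behavior over time, the sketch below computes a Population Stability Index (PSI) between a baseline sample of a feature or score and a recent sample. PSI is a common drift statistic, not something the AI RMF prescribes, and the 0.25 alert threshold in the usage is a widely quoted rule of thumb rather than a standard.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]
recent = [v + 0.5 for v in baseline]  # simulated shift in the input data

drift = psi(baseline, recent)
needs_review = drift > 0.25  # rule-of-thumb threshold, an assumption
```

In practice a check like this would run on a schedule per feature and per model output, with breaches feeding the escalation pathways defined under Govern.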

Invest in educating your workforce on AI ethics, risk management, and compliance across all departments. Leverage AI-driven tools to automate documentation, maintain detailed audit trails, and generate reports efficiently, reducing manual workloads. For example, in 2024, Synthesia adopted Wiz's AI-SPM to tackle alert fatigue and prioritize AI vulnerabilities. This allowed their engineering teams to independently resolve security issues while gaining comprehensive visibility into their AI infrastructure.

Track your progress against a maturity benchmark, such as the Implementation Tiers borrowed from the NIST Cybersecurity Framework, and shift from periodic audits to continuous, automated monitoring. These practices align with the NIST AI RMF's emphasis on ongoing improvement. With 68% of companies reporting moderate to severe AI talent shortages, building internal expertise through training and automation has never been more critical.

Managing Specific AI Risks with the NIST AI RMF

Reducing Bias and Meeting Ethical Standards

The NIST AI RMF tackles bias through its four core functions - Govern, Map, Measure, and Manage - helping organizations identify and address unfair outcomes. NIST highlights three categories of AI bias that need attention: systemic (rooted in institutional and organizational practices), computational and statistical (arising from non-representative data or algorithmic processes), and human-cognitive (stemming from how people make judgments when building and using AI systems).

To address these biases, organizations should form cross-functional teams that include data scientists, legal advisors, and ethics experts. Before deploying new models, document your organization's tolerance for risks related to fairness and privacy. The framework also underscores the importance of Explainable AI, which ensures models are interpretable, making it easier to trace decision-making processes and pinpoint where bias might have crept in.

"When AI models are transparent, it becomes easier to understand their decision-making processes, identify sources of bias, and take corrective action." – RSI Security

Regular fairness audits are essential, especially for high-stakes applications like hiring or lending. Use specialized AI auditing tools and diversify training datasets to reflect inclusive populations, mitigating discriminatory patterns. Implement systems that continuously monitor for "data drift", which occurs when data patterns shift over time, potentially introducing new biases into previously balanced models. On July 26, 2024, NIST released NIST-AI-600-1, a profile specifically for managing risks in Generative AI, addressing the growing complexity of bias in these systems.
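A fairness audit for a hiring or lending model often starts with a simple comparison of selection rates across groups. The sketch below computes a disparate impact ratio; the 0.8 cut-off reflects the "four-fifths" screening heuristic used in U.S. employment contexts, and the sample records are made up. It is a first-pass screen, not a complete fairness assessment.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs; returns rate per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(selected)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Illustrative data: group A selected 8 of 10 times, group B 4 of 10.
records = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)

ratio = disparate_impact_ratio(records)
flagged = ratio < 0.8  # four-fifths heuristic; adjust to your own policy
```

A flagged result is a prompt for investigation rather than proof of discrimination, since selection rates can differ for reasons the ratio alone cannot distinguish.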

Protecting AI Systems from Security Threats

Addressing bias is critical, but securing AI systems from threats is equally important. Adopting a Zero Trust Architecture is a key step, ensuring that every access request to models or training data is verified. The NIST AI RMF's "Manage" function guides organizations in prioritizing risks through technical controls and procedural safeguards.

To bolster security, test models with adversarial inputs during training and deploy real-time systems capable of detecting manipulation attempts. Protect the AI data pipeline with encryption and strict access controls to guard against data poisoning. Strengthen deployment infrastructure with firewalls, intrusion detection systems, and regular updates to ensure secure operation.
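A very small stand-in for input-robustness testing is sketched below: it adds bounded random noise to each input and reports how often the prediction flips. Random perturbation is much weaker than a true adversarial attack, which searches for worst-case inputs, so treat this as a smoke test only. `toy_model` and the `epsilon` value are placeholders for your own model and threat assumptions.

```python
import random


def flip_rate(predict, inputs, epsilon=0.05, trials=50, seed=0):
    """Fraction of noisy copies whose prediction differs from the clean one."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    flips = total = 0
    for x in inputs:
        base = predict(x)
        for _ in range(trials):
            noisy = [v + rng.uniform(-epsilon, epsilon) for v in x]
            flips += predict(noisy) != base
            total += 1
    return flips / total


def toy_model(x):
    # Stand-in classifier: positive when the feature sum exceeds 1.0.
    return sum(x) > 1.0


stable = flip_rate(toy_model, [[0.1, 0.1], [2.0, 2.0]])  # far from the boundary
fragile = flip_rate(toy_model, [[0.5, 0.5]])             # on the boundary
```

Inputs with a high flip rate sit close to a decision boundary and are natural candidates for closer adversarial testing with a dedicated toolkit.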

"The NIST AI RMF is a guidance designed to improve the robustness and reliability of artificial intelligence by providing a systematic approach to managing risks." – Palo Alto Networks

Incorporate threat modeling into your software development lifecycle with a focus on AI-specific risks. Maintain an inventory of all AI systems to eliminate "shadow AI" - unauthorized systems operating without proper security oversight. Regular vulnerability scans and penetration testing can help uncover security gaps before they are exploited. Organizations are increasingly aiming for "Tier 4: Adaptive" maturity, a level borrowed from the NIST Cybersecurity Framework that focuses on dynamic risk management to address evolving AI threats.

Maintaining Clear Documentation and Accountability

Once bias and security risks are addressed, clear documentation and accountability become essential for effective risk management. Comprehensive records of AI models, data sources, and decision-making processes ensure transparency and support ongoing monitoring and system improvements.

"Documentation can enhance transparency, improve human review processes, and bolster accountability in AI system teams." – NIST

Define clear roles and establish communication channels for managing AI risks across teams, in line with subcategory GOVERN 2.1 of the NIST framework. Introduce feedback mechanisms for users and affected communities to report issues or challenge AI-driven decisions. Maintain a detailed inventory of all AI systems to enable consistent oversight based on organizational risk priorities. The framework's socio-technical approach emphasizes the importance of addressing social, legal, and ethical impacts alongside technical considerations.

Use the "Map" function to assess the potential societal impacts - both positive and negative - of an AI system before deployment. Ensure decision-making teams are diverse in expertise and demographics to identify hidden biases and emerging risks. Because the NIST AI RMF is voluntary and has no formal enforcement mechanisms, its success depends entirely on an organization's commitment. This makes strong internal accountability structures even more critical.

Conclusion and Next Steps

Why the NIST AI RMF Matters

The NIST AI RMF has become a cornerstone for managing AI risks in 2026, especially as governance increasingly determines the success or failure of AI initiatives. With private sector AI investments surpassing $100 billion in the U.S. during 2024, structured risk management is no longer optional. The framework tackles pressing issues like Shadow AI - where 77% of employees rely on generative AI at work, yet only 28% of organizations have established clear policies. This gap is not just a governance challenge; it’s a financial one too. Shadow AI-related data breaches cost an average of $670,000 more than typical breaches, putting both finances and reputations at risk.

Beyond internal risks, the framework also positions organizations to align with emerging regulations. For instance, the EU AI Act entered into force in August 2024, and most of its obligations apply from August 2026; the most serious violations can draw penalties as high as €35 million or 7% of global annual turnover, whichever is higher. Meanwhile, poor data quality - a frequent challenge in AI governance - costs companies an estimated $15 million annually. The NIST AI RMF supports a dynamic, ongoing assurance model in place of 6-12 week risk workflows that simply can’t keep pace with AI’s rapid development cycles. These realities make it clear that organizations must act now to adapt their operations.

What AI Leaders Should Do Now

AI leaders need to act decisively to integrate the framework into their operations. Start by conducting a comprehensive AI inventory to document all AI systems in use. This includes foundational models, hosting environments, and data sensitivity levels. By doing so, you can address Shadow AI and gain a clear understanding of your organization’s AI footprint.

Next, form a cross-functional AI Governance Committee with representatives from legal, risk, IT, and data science teams. This ensures governance becomes a part of the AI development lifecycle. It’s also crucial to define your organization’s risk tolerance by setting clear thresholds, such as acceptable error rates, to balance strategic goals with operational limits.
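Risk tolerance is easier to enforce once it is written down as explicit thresholds. The sketch below assumes two metrics with made-up limits and reports which ones a model exceeds; the metric names and values are hypothetical and would come from your governance committee.

```python
# Hypothetical tolerance thresholds agreed by the governance committee.
TOLERANCE = {
    "error_rate": 0.05,
    "false_positive_rate": 0.02,
}


def breaches(metrics, tolerance=TOLERANCE):
    """Return metrics that exceed tolerance; a missing metric counts as a breach."""
    found = {}
    for name, limit in tolerance.items():
        value = metrics.get(name)
        if value is None or value > limit:
            found[name] = value
    return found


release_blockers = breaches({"error_rate": 0.03, "false_positive_rate": 0.04})
```

Treating an unreported metric as a breach is a deliberate choice here: a model that was never measured should not pass a gate by default.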

To address risks like model hallucinations and ethical concerns, integrate human oversight into AI decision-making processes. Vendor contracts should also be updated to require transparency about data sources, training methods, and implemented risk controls. For organizations leveraging large language models, adopting the NIST-AI-600-1 Generative AI Profile - introduced in July 2024 - can help mitigate unique risks like data poisoning and hallucinations.

Moving Forward with AI Risk Management

Organizations that embrace governance and risk assessment early gain a competitive edge, building trust with customers, regulators, and stakeholders alike. Adjusting governance to match the level of risk allows innovation to flourish while maintaining essential safeguards. NIST has committed to a formal review of the framework by 2028, incorporating community feedback to ensure it evolves alongside AI advancements.

"By calibrating governance to the level of risk posed by each use case, it enables institutions to innovate at speed while balancing the risks - accelerating AI adoption while maintaining appropriate safeguards." – PwC

With 96% of leaders acknowledging that generative AI increases the risk of security breaches, it’s critical to prioritize oversight of high-risk systems first, then expand governance across the organization. The NIST AI RMF isn’t just about meeting compliance requirements - it’s about building sustainable AI systems that deliver long-term value while ensuring safety, fairness, and security.

FAQs

How does the NIST AI RMF support compliance with the EU AI Act?

The NIST AI Risk Management Framework (AI RMF) offers a structured, risk-focused approach that aligns well with the requirements outlined in the EU AI Act. The framework guides organizations in identifying, evaluating, addressing, and tracking AI-related risks throughout the lifecycle of their AI systems, which supports compliance with the Act's standards for high-risk AI applications. Because the AI RMF is voluntary guidance, following it does not by itself establish legal compliance.

The framework is built around four core functions: govern, map, measure, and manage. The context gathered through these functions, especially Map, helps businesses classify their AI systems into the EU AI Act's risk categories - minimal, limited, high-risk, and prohibited. It also supports the creation of essential documentation and transparency reports. Mapping NIST guidance to the EU requirements lets organizations reuse the same evidence for both, reducing duplicated compliance work.

How can organizations integrate the NIST AI RMF into their existing workflows?

Integrating the NIST AI Risk Management Framework (AI RMF) into your organization’s workflows requires a structured approach. Start by focusing on AI governance - this means assigning clear roles, drafting solid policies, and embedding trust in every AI project. Establishing governance ensures accountability and sets the stage for effective risk management.

Next, create an AI RMF profile tailored to your specific needs. Whether you're working on fraud detection, hiring, or another application, this customized profile will act as your roadmap for managing risks effectively.

As you move forward, make sure your processes align with the RMF’s four core functions: Govern, Map, Measure, and Manage. These functions should guide every phase of the AI lifecycle, from initial design to ongoing evaluation. Along the way, keep a close eye on key metrics like data quality, model accuracy, and potential bias. Regular monitoring helps you catch and address risks before they escalate.

Lastly, maintain a thorough audit log. Documenting decisions, risk evaluations, and mitigation efforts not only ensures accountability but also supports regular reviews and continuous improvement. By following these steps, you can integrate the NIST AI RMF into your workflows effectively, strengthening both compliance and risk management.
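One way to make an audit log harder to alter quietly is to chain entries together with hashes, so that editing an earlier record invalidates everything after it. The sketch below is an in-memory illustration under that assumption; the event fields are invented, and a production log would also need durable storage and access controls.

```python
import hashlib
import json


class AuditLog:
    """Append-only log in which each entry stores the hash of the previous one."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(event, prev_hash):
        # Events must be JSON-serializable; sort_keys keeps the hash stable.
        body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, event):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {"event": event, "prev": prev_hash,
                 "hash": self._digest(event, prev_hash)}
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash; False means an entry was changed or reordered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != self._digest(entry["event"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"system": "resume-screener", "decision": "risk accepted", "by": "AI committee"})
log.append({"system": "resume-screener", "decision": "mitigation added", "by": "ML lead"})
intact = log.verify()
```

If any stored event is edited after the fact, `verify()` returns `False`, which gives reviewers a quick integrity check before relying on the record.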

How can organizations tackle issues like hallucinations in generative AI using the NIST AI RMF?

Organizations can tackle the issue of hallucinations in generative AI by applying the NIST AI Risk Management Framework (AI RMF). This framework provides a structured approach through risk identification, assessment, mitigation, and monitoring, helping to pinpoint hallucination risks and put effective measures in place.

To address these challenges, key steps include performing thorough testing, ensuring output validation, and integrating human-in-the-loop reviews to spot and fix errors. By following these practices, organizations can minimize the chances of hallucinations, making their generative AI systems more dependable.
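The output-validation and human-in-the-loop steps above can be sketched as a simple routing rule. This version assumes each generated answer carries a confidence score and a flag for whether it cites a retrieved source; both fields and the 0.8 threshold are hypothetical and would depend on your own validators.

```python
from dataclasses import dataclass


@dataclass
class Output:
    text: str
    confidence: float   # hypothetical score from the model or a validator
    cites_source: bool  # whether the answer is grounded in a retrieved source


def route(output, min_confidence=0.8):
    """Send ungrounded or low-confidence answers to a human reviewer."""
    if not output.cites_source:
        return "human_review"
    if output.confidence < min_confidence:
        return "human_review"
    return "auto_release"


decisions = [
    route(Output("Policy X applies.", 0.95, True)),
    route(Output("Policy Y applies.", 0.95, False)),
    route(Output("Policy Z applies.", 0.50, True)),
]
```

Routing on grounding first reflects the idea that a confident answer with no supporting source is exactly the pattern a hallucination tends to produce.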

